Human-robot collaboration is superior to fully-automated or fully-manual solutions in terms of flexibility, cost, and efficiency, says Scott Denenberg.

Denenberg is the co-founder and chief architect of Veo Robotics, an innovative US-based company building the next generation of collaborative robotics systems.

Using advanced computer vision, 3D sensing and AI, Veo Robotics is enabling standard industrial robots to be used in collaborative workcells with humans.

In this article, Denenberg reveals how human-robot collaboration can be achieved ...

Robots and machines, in their straight-out-of-the-box state, are dumb. Humans, on the other hand, have evolved five finely-honed senses and brains that process stimuli from those senses, which allow us to fluidly interact and communicate with our surroundings.

Think about, for example, two line cooks working side by side in a narrow kitchen: one washes and chops, while the other preps and sautés ingredients to be plated onto larger dishes in the kitchen. Each of them moves back and forth reviewing tickets and reaching for ingredients based on the orders at hand.

Constantly, the two chefs size each other up: who’s holding the hot pan, who’s holding a sharp knife, who needs to reach that high shelf?

Without saying a word, they signal through body language and agree on who stops and who continues, and where and how that should play out. Subconsciously, they are collecting and classifying 3D visual, aural, and tactile signals, and outputting a set of simple actions: acknowledge, stop, shift right, shift left, allow passage, and continue trajectory. All of this happens in a split second and results in (mostly) error-free, fluid collaboration.

Robots focus on a sense of touch

Now consider the case of a human and a robot in a workcell. If we want them to safely collaborate at all (never mind even getting close to the way two humans can intuitively adjust around each other), we need to give the robot some way of sensing the world, processing the information it collects, and generating a collaborative action.

Roboticists continue to consider the best modes of safe collaboration, but the first class of collaborative robots focuses on one of the senses – our sense of touch – as the means for communication and collaboration between robots and humans.

Power and Force Limited (PFL) robots, as described in ISO 10218 and ISO/TS 15066, are a class of robots that “collaborate” with humans by sensing contact. Touching the robot is a trigger signal for the robot to stop and avoid harm to its human collaborator. This is a crude solution (similar to the way many of us parallel park – hitting the bumper of the car behind us means we should stop), but it’s still effective for many applications.

Upgrading beyond touch

A better solution would be upgrading robot sensing capabilities beyond touch. A sophisticated vision system would allow a robot to “see” its environment and the humans in it and react accordingly in a safe and collaborative way. This would allow the implementation of the Speed and Separation Monitoring standard (SSM from ISO 10218 and ISO/TS 15066), which basically says: “I, the robot, see you, and I will stop moving before I hit you.”

While not quite comparable to the level of communication between two cooks in a tight kitchen, this would at least be a couple of steps beyond the “you touched me so I’ll stop” collaboration mode.

The problem is, this vision system (which doesn’t have to “see” the way humans “see” – it could use infrared, UV, sonar, or even radar) has to be sophisticated enough to not only detect, map, and classify what it’s “seeing,” but also predict the trajectory of what it’s seeing.

Falling short along several dimensions

Although many new technologies like 2D LIDAR do give robots the ability to “see” their environments, they fall short along several dimensions and do not provide the richness of data needed to implement the SSM standard. 2D LIDAR only scans one plane at a time – if a 2D LIDAR setup guarding an industrial robot detects something a few meters away, it will have to stop the robot completely, because the intrusion could be a person’s legs, and the person’s arms could be much closer to the robot.

However, newly available (and increasingly less costly) sensors providing 3D depth information, like 3D time-of-flight cameras, 3D LIDAR, and stereo vision cameras, can detect and locate intrusions into an area with much more accuracy.

If a 3D system guarding a robot detects a person’s legs a few meters away, it will allow the robot to continue to operate until that person actually stretches out their arm toward the robot.
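To make the difference concrete, here is a minimal Python sketch of how the two kinds of sensor might estimate separation. It is an illustration only, not a description of any particular product: the function names and the `ASSUMED_ARM_REACH_M` value are hypothetical. With a planar scan, the system has to subtract a worst-case arm reach from the measured distance; with 3D points, it can use the closest point actually observed on the person.

```python
import math

# Hypothetical worst-case arm reach (meters) that a planar scanner must assume,
# because it cannot see where the person's arms actually are.
ASSUMED_ARM_REACH_M = 0.85


def separation_from_2d_scan(robot_xy, hit_xy, arm_reach=ASSUMED_ARM_REACH_M):
    """Conservative separation when a single scan plane sees only legs at hit_xy."""
    planar_distance = math.dist(robot_xy, hit_xy)
    return max(0.0, planar_distance - arm_reach)


def separation_from_3d_points(robot_xyz, person_points):
    """Separation to the closest observed 3D point on the person."""
    return min(math.dist(robot_xyz, point) for point in person_points)


# A person standing 2 m away with arms down, then reaching toward the robot.
robot = (0.0, 0.0, 1.0)
arms_down = [(2.0, 0.0, 0.5), (2.0, 0.0, 1.2), (1.8, 0.0, 1.0)]
arm_out = [(2.0, 0.0, 0.5), (2.0, 0.0, 1.2), (1.0, 0.0, 1.0)]

conservative = separation_from_2d_scan((0.0, 0.0), (2.0, 0.0))
measured_down = separation_from_3d_points(robot, arms_down)
measured_out = separation_from_3d_points(robot, arm_out)
```

In this toy case the planar estimate is the same whether or not the person reaches out, while the 3D estimate only shrinks when the arm actually moves toward the robot.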

This enables a much closer interlock between the actions of machines and the actions of humans, which means industrial engineers will be able to design processes where each subset of a task is appropriately assigned to a human or a machine for an optimal solution.

Such allocation of work maximizes workcell efficiency, lowers costs, and keeps human workers safe.

Building a new 3D sensing system

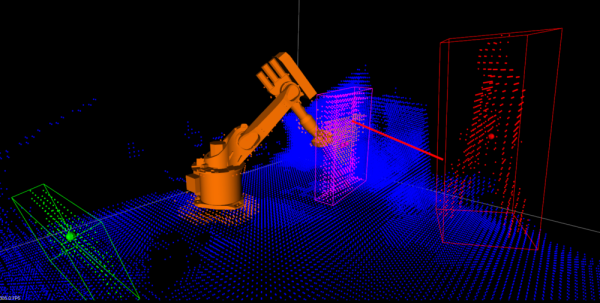

Technical and market challenges to the development and implementation of this type of system abound, but at Veo, we’re building a 3D sensing system that implements the Speed and Separation Monitoring standard using custom 3D time-of-flight cameras and computer vision algorithms.

In order to give industrial robots the perception and intelligence they need to collaborate safely with humans, our system measures the necessary protective separation distance as a function of the state of the robot and other hazards, the locations of operators, and parameters including other robot safety functions and system latency. If the protective separation distance is violated (for example, by a human arm), a protective stop occurs.
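As a rough illustration of that calculation, the sketch below uses a constant-speed simplification of the protective separation distance in the style of ISO/TS 15066: the distance the human can cover while the system reacts and the robot stops, plus the distance the robot covers while the system reacts, plus the robot's stopping distance and a set of allowances. The class name, function names, and every numeric value are assumptions chosen for the example; real values come from a risk assessment and the robot's measured stopping performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SsmParams:
    """Illustrative parameters only (assumed, not taken from any real system)."""
    human_speed: float = 1.6           # v_h, m/s, assumed operator approach speed
    reaction_time: float = 0.1         # T_r, s, sensing and processing latency
    stop_time: float = 0.3             # T_s, s, time for the robot to stop
    intrusion_allowance: float = 0.1   # C, m
    sensor_uncertainty: float = 0.05   # Z_d, m, operator position uncertainty
    robot_uncertainty: float = 0.02    # Z_r, m, robot position uncertainty


def protective_separation_distance(robot_speed, stop_distance, p=SsmParams()):
    """S_p = S_h + S_r + S_s + C + Z_d + Z_r, with constant speeds assumed."""
    s_h = p.human_speed * (p.reaction_time + p.stop_time)  # human travel
    s_r = robot_speed * p.reaction_time                    # robot travel before braking
    return (s_h + s_r + stop_distance
            + p.intrusion_allowance + p.sensor_uncertainty + p.robot_uncertainty)


def needs_protective_stop(measured_separation, robot_speed, stop_distance, p=SsmParams()):
    """True when the measured separation violates the required distance."""
    return measured_separation < protective_separation_distance(robot_speed, stop_distance, p)


# Robot moving at 1.0 m/s toward the operator with a 0.15 m stopping distance.
required = protective_separation_distance(robot_speed=1.0, stop_distance=0.15)
stop_now = needs_protective_stop(0.9, robot_speed=1.0, stop_distance=0.15)
```

Because the required distance grows with robot speed and system latency, slowing the robot or reducing latency lets a person work closer before a stop is triggered.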

The Veo system perceives the state of the environment around the robot at 30 frames per second in 3D. As Clara Vu, co-founder and VP of engineering, put it, the Veo system determines all possible future states of a robot’s environment, anticipates possible undetected items and monitors for potentially unsafe conditions. It then communicates with the robot’s control system to slow or stop the robot before it comes in contact with anything it’s not supposed to contact.
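One generic way to picture "anticipating undetected items" is to treat any space the sensors cannot see as if it might be occupied. The sketch below is a simplified, hypothetical illustration of that conservative idea using a small voxel map; the `Voxel` states and the `min_hazard_distance` function are invented for this example and are not a description of the actual Veo algorithms.

```python
import math
from enum import Enum


class Voxel(Enum):
    EMPTY = 0      # observed and free
    OCCUPIED = 1   # observed and containing something
    UNKNOWN = 2    # occluded or outside every sensor's field of view


def min_hazard_distance(robot_xyz, voxels):
    """Distance from the robot to the nearest voxel that is not known to be empty.

    voxels maps a voxel center (x, y, z) in meters to its Voxel state.
    UNKNOWN voxels are treated like OCCUPIED ones, the conservative choice.
    """
    candidates = [center for center, state in voxels.items()
                  if state is not Voxel.EMPTY]
    if not candidates:
        return math.inf
    return min(math.dist(robot_xyz, center) for center in candidates)


# A detected person at 2 m, with an occluded region only 1 m from the robot.
scene = {
    (0.5, 0.0, 1.0): Voxel.EMPTY,
    (1.0, 0.0, 1.0): Voxel.UNKNOWN,
    (2.0, 0.0, 1.0): Voxel.OCCUPIED,
}
nearest = min_hazard_distance((0.0, 0.0, 1.0), scene)
```

Here the occluded voxel, not the detected person, sets the distance the robot has to respect, which is why good sensor coverage matters for keeping a workcell productive.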

This means robots equipped with our system can safely work in close quarters with humans in industrial manufacturing settings – a material step in the direction of two line cooks in a tight kitchen.

About the author

Scott Denenberg is co-founder and chief architect of Massachusetts-based Veo Robotics.

Denenberg is a member of the US and international standards committees for ISO 10218 and ISO/TS 15066 on collaborative robotics. Previously, he led the development of sensors for applications in oil and gas, aerospace, and defence at Jentek Sensors. He holds a B.S. in EECS from Harvard and a PhD in Electrical Engineering from the University of Illinois at Urbana-Champaign.

This article was originally published by Veo Robotics.