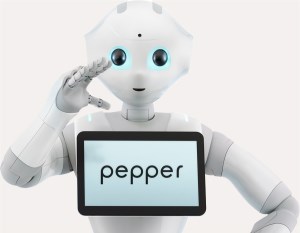

SoftBank Robotics’ Pepper humanoid robot will soon expand its emotional intelligence to understand more complex cognitive states and expressions, thanks to a new partnership with AI specialists Affectiva.

Pepper can already recognise human emotions such as joy, anger, and surprise, but it is now set to take a significant step further.

Affectiva’s Emotion AI will be integrated into Pepper, giving the robot the ability to “detect highly nuanced states based on people’s facial and vocal expressions” in real time, the companies say.

While Pepper can already detect emotions via its microphones and cameras, the inclusion of the Emotion AI technology will allow it to detect more advanced states.

For example, Pepper will be able to identify cognitive states such as distraction and drowsiness, and to differentiate between a smile and a smirk.

Understanding these more complex states will give Pepper more meaningful interactions with people, says Affectiva.

That means Pepper will be able to adapt its behaviour “to better reflect the way people interact with one another.”

Next generation of human-machine interaction

Pepper has already played a customer service role in banks, retail stores, airports, museums and hotels around the world.

Through the new partnership, it will be able to expand its companion and concierge abilities and take on new roles.

“But this is only the beginning, especially as Pepper continues to evolve and learns to relate to people in increasingly meaningful ways,” says Marine Chamoux, affective computing roboticist at SoftBank Robotics.

“The partnership really signifies the next generation of human-machine interaction, as we approach a point where our interactions with devices and robots like Pepper more closely mirror how people interact with one another.”

Mutual trust between humans and robots

Dr Rana el Kaliouby, co-founder and CEO of Affectiva, said there would be a need for deeper understanding and mutual trust between humans and robots as the machines take on more interactive roles in healthcare, homes, and retail environments.

“Just as people interact with one another based on social and emotional cues, robots need to have that same social awareness in order to truly be effective as co-workers or companions,” el Kaliouby said.

Affectiva uses machine learning, deep learning, computer vision, and speech science for its emotional intelligence technology, which the company calls Emotion AI.

The company said it has the “world’s largest emotion data repository,” with more than 7 million faces analysed across 87 countries. It is also working with the automotive industry to provide multi-modal driver state monitoring and in-cabin mood sensing.

Source: Robotics Business Review