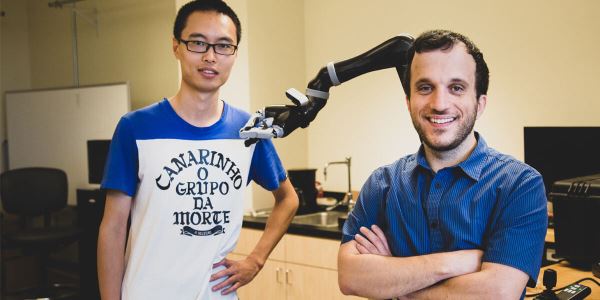

A study conducted by a team of computer scientists from the University of Southern California has found that robot systems learn better when humans act in an adversarial manner towards them or show them some “tough love”.

In the study, titled “Robot Learning via Human Adversarial Games”, researchers found that training a robot with a human adversary significantly improved both its ability to grasp objects and the robustness of those grasps in a computer-simulated manipulation task.

“This is the first robot learning effort using adversarial human users,” says Assistant Professor of Computer Science and study co-author Stefanos Nikolaidis.

“Picture it like playing a sport: if you’re playing tennis with someone who always lets you win, you won’t get better. Same with robots. If we want them to learn a manipulation task, such as grasping, so they can help people, we need to challenge them.”

The experiment

The initial experimental setup consisted of a computer simulation, a robot and a human observer at a computer. The robot attempts to grasp an object and, if it succeeds, the observing human tries to snatch the object from the robot’s grasp, using the keyboard to signal the direction of the pull.

This added challenge helps the robot learn the difference between a weak grasp and a firm grasp. The robot system rejected unstable grasps and adopted more robust ones, which made it harder for the human adversary to snatch the object away.
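The intuition can be illustrated with a toy model. This is not the USC team's method. It is a hypothetical epsilon-greedy learner choosing among three candidate grasps with made-up stability scores. If every grasp lifts the object and nobody interferes, every grasp earns the same reward, so the learner cannot tell weak grasps from firm ones. If a "snatch" of random strength follows each lift, only stable grasps keep earning reward.

```python
import random

def train(stabilities, adversary, trials=3000, epsilon=0.2, seed=0):
    """Epsilon-greedy estimate of each grasp's success rate (hypothetical toy model)."""
    rng = random.Random(seed)
    n = len(stabilities)
    estimates = [0.0] * n  # running mean reward per grasp
    counts = [0] * n
    for _ in range(trials):
        if rng.random() < epsilon:
            g = rng.randrange(n)  # explore: try a random grasp
        else:
            g = max(range(n), key=lambda i: estimates[i])  # exploit the best so far
        held = True  # assumption: every grasp lifts the object successfully
        if adversary:
            force = rng.random()  # snatch strength, uniform on [0, 1)
            held = stabilities[g] > force  # a grasp survives if it is stronger than the pull
        reward = 1.0 if held else 0.0
        counts[g] += 1
        estimates[g] += (reward - estimates[g]) / counts[g]  # incremental mean
    return estimates

stabilities = [0.2, 0.5, 0.9]  # hypothetical: weak, medium and firm grasp
print("with collaborator:", train(stabilities, adversary=False))
print("with adversary:   ", train(stabilities, adversary=True))
```

In the collaborator run, every estimate ends at 1.0, so all three grasps look equally good. In the adversarial run, each estimate approaches that grasp's stability score, and the firm grasp ranks highest.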

In experiments, the model achieved a 52 per cent grasping success rate when trained with a human adversary, versus 26.5 per cent when trained with a human collaborator.

“The robot learned not only how to grasp objects more robustly, but also to succeed more often with new objects in a different orientation, because it has learned a more stable grasp,” says Professor Nikolaidis.

The study also found that the robot trained with a human adversary outperformed one trained with a simulated adversary, which achieved a 28 per cent grasping success rate. “Humans can understand stability and robustness better than learned adversaries,” explains Professor Nikolaidis.

“The robot tries to pick up stuff and, if the human tries to disrupt, it leads to more stable grasps. And because it has learned a more stable grasp, it will succeed more often, even if the object is in a different position. In other words, it’s learned to generalize. That’s a big deal.”

Professor Nikolaidis and his team use reinforcement learning, a technique in which artificial intelligence programs “learn” from repeated experimentation. The robotic system learns from previous examples, which in theory increases the range of tasks it can perform.

However, robotic systems need to see a vast number of examples to learn how to manipulate an object with human-like dexterity. Without extensive training, a robot can’t pick up an object, manipulate it with another grip, or grasp and handle a different object.

Improving a robot’s dexterity is vital if assistive robotic devices, such as grasping robots, are to be used to help people with disabilities. Robotic systems must be able to operate reliably in real-world environments.

Previous research has shown that human input can be effective when training robotic systems, with the human providing feedback by demonstrating how to complete the task. However, these algorithms have assumed a cooperative human supervisor assisting the robot.

“I’ve always worked on human-robot collaboration, but in reality, people won’t always be collaborators with robots in the wild,” says Professor Nikolaidis.

The computer science team took the opposite approach: instead of helping the robot, the human would make things harder for it. The thinking was that the added challenge would make the system more robust to real-world complexity.

Future of robot learning

Professor Nikolaidis hopes to have the system working on a real robot arm within a year. That will bring the challenges of the real world, where slight friction or noise in a robot’s joints can throw things off.

However, Professor Nikolaidis is hopeful about the future of adversarial learning for robotics. “I think we’ve just scratched the surface of potential applications of learning via adversarial human games,” he says.

“We are excited to explore human-in-the-loop adversarial learning in other tasks as well, such as obstacle avoidance for robotic arms and mobile robots, such as self-driving cars.”

Professor Nikolaidis believes that the future of robot learning lies in finding the right balance between tough love and encouragement.

“I feel that tough love – in the context of the algorithm that we propose – is again like a sport: it falls within specific rules and constraints,” says Professor Nikolaidis.

“If the human just breaks the robot’s gripper, the robot will continuously fail and never learn. In other words, the robot needs to be challenged but still be allowed to succeed in order to learn.”
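A toy calculation makes the point about rules and constraints. This is a hypothetical model, not taken from the study. Assume each grasp has a stability score in [0, 1] and the adversary's snatch force is drawn uniformly from [0, max_force]; a grasp holds whenever its stability exceeds the force. As a crude stand-in for "how much there is to learn", we use the spread in success rate between the best and worst grasp.

```python
def hold_rate(stability, max_force):
    """Probability a grasp survives a snatch with force uniform on [0, max_force]."""
    if max_force == 0:
        return 1.0  # no adversary: every grasp survives
    return min(stability / max_force, 1.0)

def learning_signal(stabilities, max_force):
    """Spread in success rate between best and worst grasp; 0 means nothing to learn."""
    rates = [hold_rate(s, max_force) for s in stabilities]
    return max(rates) - min(rates)

grasps = [0.2, 0.5, 0.9]  # hypothetical stability scores
for label, force in [("collaborator", 0.0),
                     ("fair adversary", 1.0),
                     ("unconstrained", float("inf"))]:  # infinite force: every grasp fails
    print(label, learning_signal(grasps, force))
```

Both extremes give a signal of zero. With no challenge, every grasp succeeds. With an adversary that always wins, every grasp fails. Only the constrained adversary leaves a gap that rewards the firmer grasp.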

Robot learning in Australia

An Australian Centre for Robotic Vision project has also taken strides in robot training. The project uses an active perception approach that works in real time, stepping away from a static camera position or fixed data-collection routines.

“The idea behind it is actually quite simple,” says PhD researcher Doug Morrison. “Our aim at the Centre is to create truly useful robots able to see and understand like humans.

“So, in this project, instead of a robot looking and thinking about how best to grasp objects from clutter while at a standstill, we decided to help it move and think at the same time,” continues Mr Morrison.

“A good analogy is how we ‘humans’ play games like Jenga or Pick Up Sticks,” says Mr Morrison. “We don’t sit still, stare, think, and then close our eyes and blindly grasp at objects to win a game. We move and crane our heads around, looking for the easiest target to pick up from a pile.”

The system builds up a map of grasps in a pile of objects, which continually updates as the robot moves. This real-time mapping predicts the quality and pose of grasps at every pixel in a depth image, fast enough for closed-loop control at up to 30 Hz.
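A continually updated grasp map can be sketched as follows. This is an illustration under stated assumptions, not the Centre's code. We assume a network has already predicted a grasp-quality value and a grasp angle for every cell of a small grid from each viewpoint, and that those predictions are already aligned to a common grid. We then fuse them with a simple running average. The class name `GraspMap` is hypothetical.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]

class GraspMap:
    """Running per-cell average of grasp quality, fused across viewpoints (sketch)."""

    def __init__(self, rows: int, cols: int):
        self.rows, self.cols = rows, cols
        self.quality_sum = [[0.0] * cols for _ in range(rows)]
        self.count = [[0] * cols for _ in range(rows)]
        # Angles wrap around, so naive averaging is wrong; keep the latest estimate.
        self.angle = [[0.0] * cols for _ in range(rows)]

    def update(self, quality: Grid, angle: Grid, visible: List[List[bool]]) -> None:
        """Fold in one viewpoint's per-cell predictions (only cells it can see)."""
        for r in range(self.rows):
            for c in range(self.cols):
                if visible[r][c]:
                    self.quality_sum[r][c] += quality[r][c]
                    self.count[r][c] += 1
                    self.angle[r][c] = angle[r][c]

    def best(self) -> Optional[Tuple[Tuple[int, int], float, float]]:
        """Return (cell, mean quality, angle) of the best grasp observed so far."""
        best = None
        for r in range(self.rows):
            for c in range(self.cols):
                if self.count[r][c]:
                    q = self.quality_sum[r][c] / self.count[r][c]
                    if best is None or q > best[1]:
                        best = ((r, c), q, self.angle[r][c])
        return best

def view(cells, rows=4, cols=4) -> Grid:
    """Build a quality grid that is zero except at the given cells."""
    q = [[0.0] * cols for _ in range(rows)]
    for (r, c), v in cells.items():
        q[r][c] = v
    return q

all_visible = [[True] * 4 for _ in range(4)]
angles = [[0.0] * 4 for _ in range(4)]
m = GraspMap(4, 4)
m.update(view({(1, 1): 0.9, (2, 2): 0.5}), angles, all_visible)
print(m.best()[0])  # (1, 1)
m.update(view({(1, 1): 0.1, (2, 2): 0.8}), angles, all_visible)
print(m.best()[0])  # (2, 2)
```

The usage shows the map "changing its mind". The grasp that looked best from the first viewpoint is overtaken once a second viewpoint rates it poorly. A real system would also need to project each depth image into a shared frame before fusing.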

According to Mr Morrison, this active approach is smarter and up to ten times faster than static, single viewpoint grasp detection methods.

“We strip out lost time by making the act of reaching towards an object a meaningful part of the grasping pipeline rather than just a mechanical necessity,” says Mr Morrison. “Like humans, this allows the robot to change its mind on the go in order to select the best object to grasp and remove from a messy pile of others.”

The active perception approach has been tested in “tidy-up” trials at the Centre for Robotic Vision’s lab at the Queensland University of Technology. The trials involved a robotic arm removing 20 objects, one at a time, from a pile of clutter.

Using this approach, the robotic arm achieved an 80 per cent success rate when grasping in clutter – up 12 per cent on traditional single viewpoint grasp detection methods.

Next steps

Next, Mr Morrison wants to fast-track how a robot learns to grasp physical objects by training it on weird, adversarial shapes rather than mundane household objects. He explains that training robots to grasp ‘human’ items is neither efficient nor beneficial.

“At first glance a stack of human household items might look like a diverse data set, but most are pretty much the same. For example, cups, jugs, flashlights and many other objects all have handles, which are grasped in the same way and do not demonstrate difference or diversity in a data set,” points out Mr Morrison.

“We’re exploring how to put evolutionary algorithms to work to create new, weird, diverse and different shapes that can be tested in simulation and also 3D printed.
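One well-known way to make an evolutionary algorithm favour diversity is novelty search. In novelty search, candidates are scored by how different they are from everything seen before, rather than by task performance. The article does not say which algorithm the Centre uses, so the sketch below is hypothetical throughout. Each "shape" is a simplified 2D outline given by eight radii around its centre, and novelty is the mean distance to the three nearest shapes in an archive.

```python
import math
import random

N_RAYS = 8  # hypothetical: a shape is 8 radii around its centre

def random_shape(rng):
    return [rng.uniform(0.5, 1.5) for _ in range(N_RAYS)]

def mutate(shape, rng, sigma=0.2):
    # Perturb each radius, clamped so the shape keeps a positive, bounded size.
    return [min(2.0, max(0.1, r + rng.gauss(0, sigma))) for r in shape]

def novelty(shape, archive, k=3):
    # Mean distance to the k most similar shapes already in the archive.
    dists = sorted(math.dist(shape, other) for other in archive)
    nearest = dists[:k]
    return sum(nearest) / len(nearest)

def evolve(generations=30, pop_size=20, keep=5, seed=0):
    rng = random.Random(seed)
    population = [random_shape(rng) for _ in range(pop_size)]
    archive = list(population)
    for _ in range(generations):
        children = [mutate(rng.choice(population), rng) for _ in range(pop_size)]
        # Rank children by how unlike previously seen shapes they are.
        ranked = sorted(children, key=lambda s: novelty(s, archive), reverse=True)
        archive.extend(ranked[:keep])  # remember the most novel shapes
        population = ranked[:pop_size // 2] + population[:pop_size // 2]
    return archive

shapes = evolve()
print(len(shapes), "candidate shapes for simulation or 3D printing")
```

Because selection rewards difference rather than grasp success, the archive keeps spreading into unusual outlines. That matches the stated goal of a diverse data set, although a real pipeline would work with 3D meshes and check printability.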

“A robot won’t get smarter by learning to grasp similar shapes. A crazy, out-of-this-world data set of shapes will enable robots to quickly and efficiently grasp anything they encounter in the real world.”