Nvidia has introduced the first unified computing platform for both artificial intelligence and high performance computing.

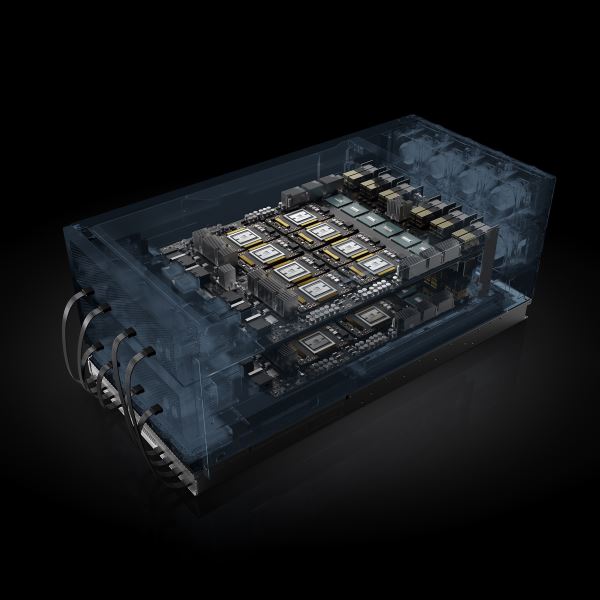

The Nvidia HGX-2™ cloud server platform offers multi-precision computing capabilities, giving it the flexibility to support the future of computing.

It allows high-precision calculations using FP64 and FP32 for scientific computing and simulations, while also enabling FP16 and Int8 for AI training and inference.

The new server platform has been designed specifically to benefit the artificial intelligence and data processing industries.

“The world of computing has changed,” said Jensen Huang, founder and chief executive officer of Nvidia.

Speaking at the recent GPU Technology Conference Taiwan, Mr Huang said CPU scaling has slowed at a time when computing demand is skyrocketing.

“Nvidia’s HGX-2 with Tensor Core GPUs gives the industry a powerful, versatile computing platform that fuses HPC and AI to solve the world’s grand challenges.”

Record AI training speeds

HGX-2 serves as a “building block” for manufacturers to create some of the most advanced systems for HPC and AI. It has achieved record AI training speeds of 15,500 images per second on the ResNet-50 training benchmark and can replace up to 300 CPU-only servers.

It incorporates breakthrough features such as the Nvidia NVSwitch™ interconnect fabric, which seamlessly links 16 Nvidia Tesla® V100 Tensor Core GPUs so they work as a single, giant GPU delivering two petaflops of AI performance. The first system built using HGX-2 was the recently announced Nvidia DGX-2™.

HGX-2 comes a year after the launch of the original Nvidia HGX-1 at Computex 2017.

The HGX-1 reference architecture won broad adoption among the world’s leading server makers and companies operating massive data centres, including Amazon Web Services, Facebook and Microsoft.

New systems planned

Four leading server makers – Lenovo, QCT, Supermicro and Wiwynn – announced plans to bring their own HGX-2-based systems to market later this year.

In addition, four of the world’s top original design manufacturers (ODMs) – Foxconn, Inventec, Quanta and Wistron – are designing HGX-2-based systems, also expected later this year, for use in some of the world’s largest cloud data centres.

HGX-2 is part of the larger family of Nvidia GPU-Accelerated Server Platforms, an ecosystem of qualified server classes addressing a broad array of AI, HPC and accelerated computing workloads with optimal performance.

Supported by major server manufacturers, the platforms align with the data centre server ecosystem by offering the optimal mix of GPUs, CPUs and interconnects for diverse training (HGX-T2), inference (HGX-I2) and supercomputing (SCX) applications.

Users can choose a specific server platform to match their accelerated computing workload mix and achieve best-in-class performance.

More information is available at http://nvidianews.nvidia.com/